Datacenters decay like everything else

One could easily presume that a datacenter doesn’t decay the way, say, a factory or a 3D printing mass-production facility would. But it does, just like any other factory. Things break, things get old, and legacy piles up that you have to carry around.

This is why datacenters need an overhaul every now and then, and why they are actually liabilities, not assets, in and of themselves. They require a lot of money to build, maintain and operate, but rarely produce any revenue by themselves.

It’s just like buying a house: most people think their own house (or apartment) is an asset, but it is not. It doesn’t produce anything by itself and requires constant maintenance and upkeep, otherwise it quickly depreciates toward zero value. Financially, it is a liability, not an asset. The same holds true for any factory, including datacenters.

Simply put: even datacenters get old and need “renovation” now and then, and more often than one would think.

What is the purpose of a datacenter?

The purpose of a datacenter is to house bare-metal servers, which are the assets it enables. They are the infrastructure you build everything else upon: you put your servers there and build further services, such as seedboxes, on top of them.

All servers need electricity, cooling, networking, security, shelter from the elements and so forth. The datacenter’s job is to provide these, nothing more and nothing less.

Decay? What Decay Could Brick Walls or Copper Wiring Have?!

It’s not just the brick walls (which do decay as well, by the way); it’s all the tech and the legacy that builds up over time.

Legacy builds up, real fast

Perhaps the fastest decay of all is the legacy that builds up over time: extra fiber or copper running here and there, forgotten to history. Ad hoc upgrades made to get something working right now because customers are down, and then long forgotten. Legacy is what gets built whenever you build new things, for example individual network cable runs, which accumulate over time. Perhaps a server or aggregation switch gets removed or replaced, but after a long day someone thinks “I’ll remove the cables tomorrow” and forgets it immediately. Now we have yet another cable taking up space that no one dares to remove.

It’s the switches that do nothing, and the dead servers everyone forgot. Many times we’ve had servers powered up, some even for a few years, with no customer on them, completely lost from the documentation, simply because after a long day someone forgot to write it down or a note with the info got lost.

It’s like cleaning: you need to be constantly vigilant, looking for legacy build-up to remove. These are chores with a big long-term impact.

HVAC Decays Really Fast Too

It’s the sensors, automation and cooling that decay. Take HVAC fans, for example: here we have HVAC fans to replace annually, since a fan or two fails every single year. Then there are the intake air filters, which need replacement twice a year. These are ongoing costs, and with each replacement and bit of upkeep you probably accrue some decay: loosened screws, loosened latches, a dent here or there. It’s all decay; even the HVAC piping decays like this.

AC Units Decay Only A Bit Slower

Then there are the AC units. Sometimes they spring a leak; maybe another company’s AC technician mistakes your unit for theirs and lets all the refrigerant out (this happened in our Helsinki datacenter in summer 2023!). Maybe an AC unit’s compressor has a design fault that causes it to leak over time, but the compressor alone is too expensive to replace compared to what the now-aged AC unit is worth.

AC units get old too: refrigerants, electronics and compressor types all get better over time. Our first AC units all had dumb on/off compressors, or rarely variable-speed ones. With one unit, every time the compressor turned on you’d hear a loud “CLUNK!”. Modern AC units are all digitally controlled inverter units, often with screw compressors and a newer refrigerant such as R32. We calculate the lifetime of an AC unit to be 10 years, and to be on the safe side an AC unit has to be paid off within 5 years. Each unit also accrues a few hundred euros per year in maintenance costs (cleaning, checking for leaks etc.).
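To make that budgeting rule concrete, here is a minimal Python sketch of the arithmetic, assuming the purchase price is recovered in equal yearly amounts over the payback period. The class name and the purchase and maintenance figures in the example are hypothetical placeholders, not our actual costs.

```python
from dataclasses import dataclass


@dataclass
class AcUnit:
    """Budgeting sketch for one AC unit (all euro figures are hypothetical inputs)."""

    purchase_eur: float
    maintenance_eur_per_year: float
    lifetime_years: int = 10  # expected service life, per the rule of thumb above
    payback_years: int = 5    # must be paid off within this time to be on the safe side

    def required_annual_recovery(self) -> float:
        """Euros per year the unit must earn back during the payback period, upkeep included."""
        return self.purchase_eur / self.payback_years + self.maintenance_eur_per_year

    def lifetime_cost(self) -> float:
        """Purchase price plus maintenance over the whole service life."""
        return self.purchase_eur + self.maintenance_eur_per_year * self.lifetime_years

    def cost_per_year(self) -> float:
        """Lifetime cost spread evenly over the service life."""
        return self.lifetime_cost() / self.lifetime_years


if __name__ == "__main__":
    # Hypothetical unit: 20 000 EUR purchase price, 300 EUR/year maintenance.
    unit = AcUnit(purchase_eur=20_000, maintenance_eur_per_year=300)
    print(f"Recover per year during payback: {unit.required_annual_recovery():.0f} EUR")
    print(f"Total cost over {unit.lifetime_years} years: {unit.lifetime_cost():.0f} EUR")
    print(f"Cost spread over the service life: {unit.cost_per_year():.0f} EUR/year")
```

With these made-up numbers the unit has to recover 4300 EUR per year during its first five years, while its cost spread over the full ten years is only 2300 EUR per year. The gap between the two is the safety margin the 5-year rule buys if the unit dies early.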

UPS is the worst!

Regular legacy-type UPS units, which almost all DCs still use, run on lead-acid batteries. These have a lifetime of around 5 years, and you have to replace all of them on a module at once. Each module has a string of many batteries in series; for example, a 144V string built from 12V batteries takes 12 of them. They all have to be of similar grade: if even one fails, the whole string fails. So either all of them are refurbished old ones, or all of them are new.
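The series-string arithmetic can be sketched in a few lines of Python. This is an illustration only: the function names and the module count in the example are hypothetical, and it assumes every string is replaced as a whole set at the end of its roughly 5-year life. (Note that each 12V lead-acid battery is itself six 2V cells in series.)

```python
import math


def batteries_per_string(string_voltage: float, battery_voltage: float = 12.0) -> int:
    """Number of series-connected batteries needed to reach the string voltage."""
    count = string_voltage / battery_voltage
    if not count.is_integer():
        raise ValueError("string voltage must be a whole multiple of the battery voltage")
    return int(count)


def string_ok(battery_healthy: list[bool]) -> bool:
    """A series string only works if every single battery in it works."""
    return all(battery_healthy)


def batteries_to_buy(modules: int, string_voltage: float, years: float,
                     lifetime_years: float = 5.0, battery_voltage: float = 12.0) -> int:
    """Batteries needed to keep all modules running for `years`, including the initial set.

    Assumes each module's whole string is swapped at once at the end of its life.
    """
    sets = math.ceil(years / lifetime_years)
    return modules * batteries_per_string(string_voltage, battery_voltage) * sets


if __name__ == "__main__":
    print(batteries_per_string(144))
    print(string_ok([True] * 11 + [False]))
    # Hypothetical site with 4 UPS modules over 10 years of operation.
    print(batteries_to_buy(modules=4, string_voltage=144, years=10))
```

For the hypothetical 4-module site this comes to 96 batteries over ten years, and a single bad battery out of 12 is enough to take its string down.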

LiFePO4 batteries are coming, but they are still rarely used.

Further, certain UPS models just burn out every few years. These are high-end, enterprise, big-money UPS units. The newer models have a built-in guaranteed maintenance plan: use ECO mode and one of the very expensive PCBs fails every 1-3 years, and the manufacturer knows it. If you use ECO mode, efficiency is in the high 90s (percent); if you don’t, it’s more like in the 70s. Yes, that’s up to 30% of the energy wasted in the UPS, and this is before periodic battery tests etc.

These same UPS units also have an idle power draw in the kilowatts, just from being powered on with no devices attached and the batteries full.

So in a typical UPS setup you have to spend a lot on maintenance every year: 50-150% of the units’ cost every 10 years, just on repairs and maintenance.
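To put these UPS figures into yearly numbers, here is a rough Python sketch. It assumes a constant load all year; the 100 kW load, the 97% and 75% efficiencies (picked from within the “high 90s” and “70s” ranges above), the 2 kW idle draw and the 100 000 EUR UPS cost are all hypothetical example values.

```python
HOURS_PER_YEAR = 24 * 365  # 8760 h, ignoring leap years


def ups_loss_kw(it_load_kw: float, efficiency: float) -> float:
    """Power dissipated in the UPS itself: input power minus power delivered to the load."""
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    return it_load_kw / efficiency - it_load_kw


def annual_kwh(power_kw: float) -> float:
    """Energy used by a constant draw over one year."""
    return power_kw * HOURS_PER_YEAR


def maintenance_per_year(ups_cost_eur: float, fraction_per_decade: float) -> float:
    """Turn 'X% of the UPS cost every 10 years' into a yearly figure."""
    return ups_cost_eur * fraction_per_decade / 10


if __name__ == "__main__":
    load_kw = 100.0  # hypothetical IT load
    for label, eff in (("ECO mode", 0.97), ("non-ECO mode", 0.75)):
        loss = ups_loss_kw(load_kw, eff)
        print(f"{label}: {loss:.1f} kW lost, {annual_kwh(loss):,.0f} kWh/year")
    print(f"Idle draw of 2 kW: {annual_kwh(2.0):,.0f} kWh/year")  # hypothetical idle draw
    for fraction in (0.5, 1.5):
        cost = maintenance_per_year(100_000, fraction)  # hypothetical UPS cost
        print(f"Maintenance at {fraction:.0%} per decade: {cost:,.0f} EUR/year")
```

At these example values, non-ECO mode burns about 33 kW continuously versus about 3 kW in ECO mode, and an idle draw of just 2 kW alone is 17 520 kWh per year.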

That type of UPS is renowned, highly regarded and highly visible. There’s even a certain YouTuber who uses the very same UPS lineup, and the one time they needed it to work, it just spewed flames!

All of that is before the other extra power requirements: they also need double feeds, double-size fuses etc. Very, very, very expensive to install and maintain.

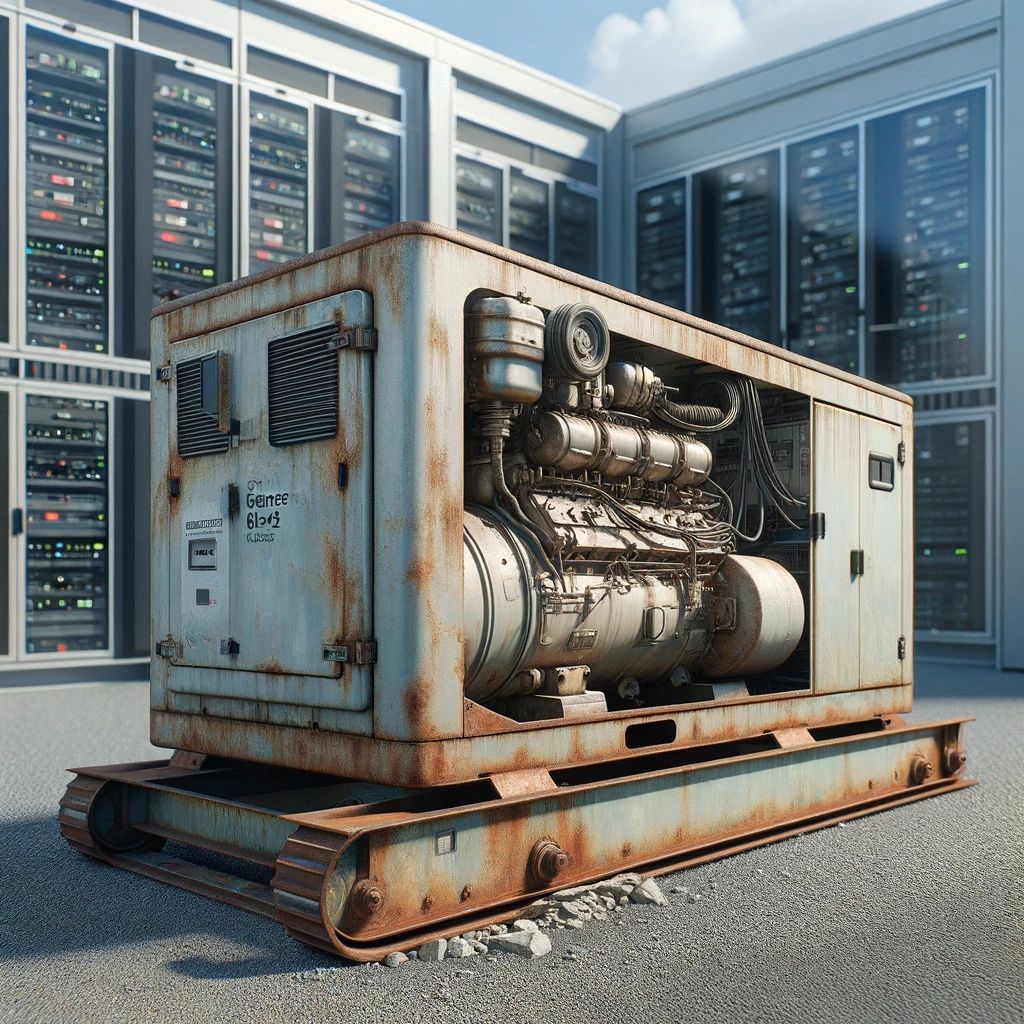

How about diesel generators? Diesel engines barely ever fail!

Oh my, these are expensive too, depending on the runtime planned for them, i.e. how big a tank you have, how often you run them etc.

Diesel gens need an annual full overhaul, replacing all fluids and filters whether or not you used them at all, to keep them reliably operable. The diesel in particular needs replacing, since it goes bad over time, and water collects in the oil unless you run the engine up to normal operating temperature every now and then.

So you have to burn a few hundred liters of diesel in each, and they have big oil capacities too, much bigger than vehicle diesel engines relative to their size. And then there are all the other potential mechanical failures.

Probably every car owner who has owned more than one car, or has had a car sit idle for a while, knows how much cars dislike not being used regularly. After a long period of disuse, everything seems a bit off, creaky and weak. It’s the same with diesel gens, except that you need them to go to full power from cold within a minute or so, after not being used for who knows how many months!

At least the diesel you burn annually just to maintain the generator can be used to power the DC and test your setup… Except many don’t do this, because there’s a high probability of some fuse blowing, a UPS giving up etc. You essentially need to cut grid power and hope the backups kick in. It’s good to test, but it always carries an inherent risk that everything goes down. Still want generators?

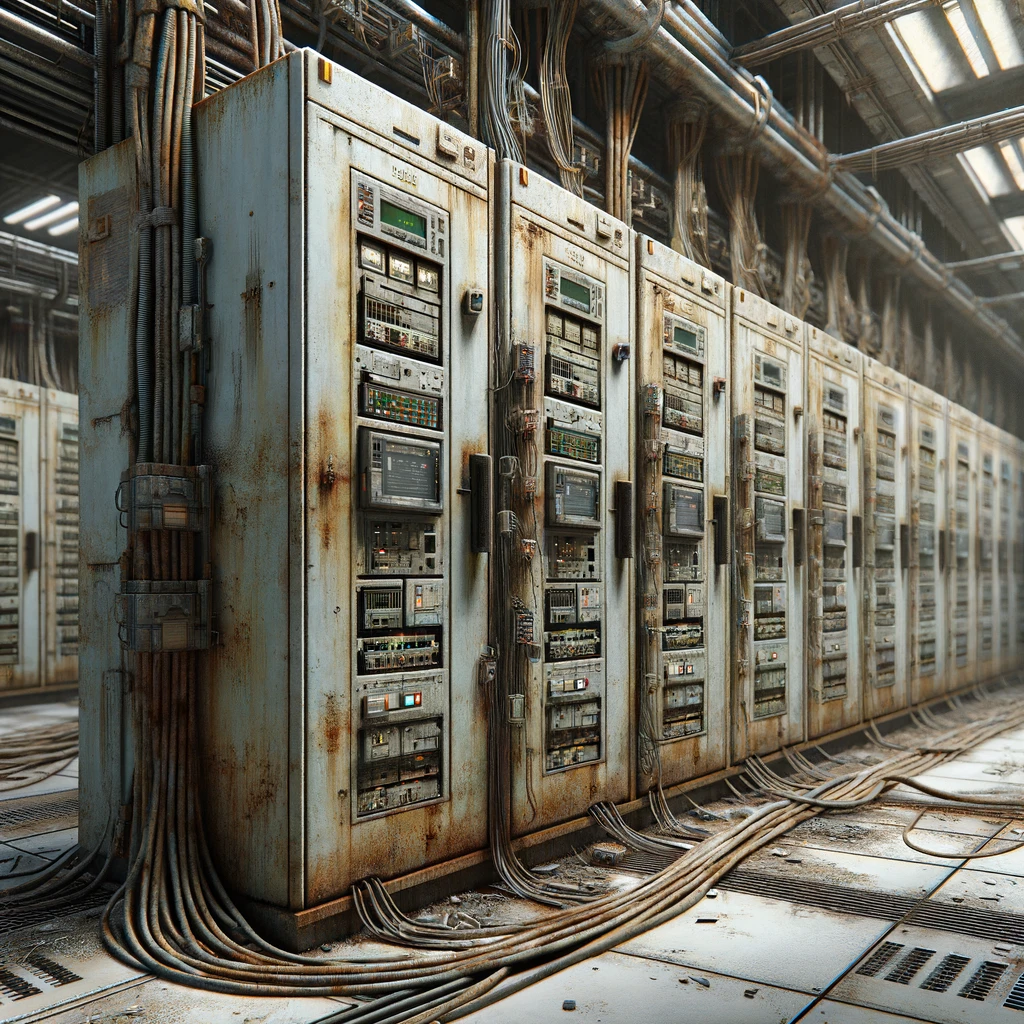

Surely your electrical delivery etc. don’t decay, RIGHT?!?

Yes, they do! Due to the high demands of servers, the electrical cabinets see heat cycles over and over again, high load etc., so screws get loose.

Sometimes an older automatic fuse (circuit breaker) just fails completely or burns out, and you need to replace a PDU. Certain Rittal modular PDUs are very rare on the aftermarket, probably precisely because they are perishable consumables: over time their fuses blow permanently; in fact, they burn out. We are actually dreading the day we have to rip them out of all our cabinets and replace them with new PDUs. That’s hundreds of servers that have to be taken down, probably for hours, because there isn’t really space to install new PDUs without taking the old ones out first. And that work has to be done in the hot aisle, a very loud and hot place. It’s not even like a sauna; it’s like working in a low-temperature air fryer!

Electrical delivery issues are fortunately rare, but they are all the more dangerous. A loose connection in an electrical cabinet can cause a fire; one datacenter in Helsinki had this issue. Too much load on a single phase caused the whole cabinet to burn.

Because of the danger they pose, you want your electrician to periodically check the live cabinets and make sure everything is tight, perhaps taking some thermal images too. You should, however, avoid actively cooling the cabinets, because then the fuses don’t work right: they are still thermally based, even the automatic ones.

Sensors can’t fail!

Oh my! Yes, they can! We use 433MHz-band wireless temperature sensors, and these need periodic battery replacement. They were not designed for industrial use, so with the default AAA batteries they might not last even a full year. Oh well, that’s just regular maintenance. How about failures? Sometimes a sensor cable or something gets nicked, and you don’t get a reading anymore. More often, though, radio interference causes false readings and false alerts. So you need to set parameters so that absurdly high readings don’t trigger alerts either; otherwise you get alert fatigue.
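The “ignore absurd readings” rule can be sketched as a simple plausibility check in front of the alert threshold. This is a minimal Python illustration, not our monitoring system’s configuration: the names and all temperature limits are hypothetical, and it also flags a missing reading separately, since a silent sensor (nicked cable, dead battery) is a failure of its own.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class AlertPolicy:
    """Thresholds for one temperature sensor; all values are hypothetical examples."""

    alert_at_c: float = 35.0        # real alert threshold
    plausible_min_c: float = -20.0  # below this, assume a garbage radio reading
    plausible_max_c: float = 70.0   # above this, assume a garbage radio reading


def classify(reading_c: Optional[float], policy: AlertPolicy) -> str:
    """Return 'missing', 'ignored', 'alert' or 'ok' for a single reading."""
    if reading_c is None:
        return "missing"  # no reading at all, e.g. nicked cable or flat battery
    if not policy.plausible_min_c <= reading_c <= policy.plausible_max_c:
        return "ignored"  # absurd value, most likely interference: log it, don't alert
    if reading_c >= policy.alert_at_c:
        return "alert"
    return "ok"


if __name__ == "__main__":
    policy = AlertPolicy()
    for reading in (24.5, 38.0, 512.0, None):
        print(reading, classify(reading, policy))
```

The key design choice is that out-of-range values are dropped before they reach the alert threshold, so interference can no longer page anyone, while a sensor that goes silent still gets noticed.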

Some monitoring system base stations have eMMC flash and auto-update periodically, which wears out the eMMC after a few years, so you need a replacement base station too.

Give me a break, the freakin’ metal server rack cabinets surely don’t age and decay!

Well, while metal decays very slowly in dry indoor conditions, even cabinets do decay. We’ve received some cabinets with rust on them! That’s not exactly what we mean here, though.

Older cabinets used cheaper metal blends and softer materials. They are extremely heavy, yet very pliable. While we don’t know their exact load capacity, we suspect it is lower than that of modern ones. They also have sharp edges, and no dimension is rigidly and accurately set.

In fact, the higher-end old Rittal cabinets are worse than cheap off-brand old cabinets. By old, we mean the old gray ones. The Rittal cabinets have the wrong dimensions: they are slightly too wide, by just 7-8mm, but that’s enough to make servers designed for simple L brackets fall off. You need to waste a few RU tying the posts tighter together to keep your servers from dropping out of the rack, due to the low weight-carrying capacity of all these old rack cabinets, brand name or off brand.

It gets even worse with the Rittal racks, though: they are bulky and waste a ton of space, yet somehow miraculously manage to be too shallow for long servers at the same time.

Newer racks also tend to have some cable management options built in, along with other features. They are more precisely built, use space more efficiently, use better materials and have very high load-carrying ratings. So you can fit more of them, and they are much nicer to work with.

You serious?! How about the floor! That’ll live as long as the building!

Not really. Many raised floor tiles are actually made of wood: a thick wood core with vinyl on one side and thin sheet metal on the other is quite typical.

While the edges are painted, they are not exactly watertight. Get even a little bit of water on them, say from washing the floors OR condensation from AC lines, and the water is sucked in and the tile swells. And no, you can’t really buy these individually. So, want to keep your raised floor looking uniform? You’d better purchase enough spares upfront to last a few decades, or live with some tiles being whatever you happened to find elsewhere.

Over time you may have needed to modify tiles too, say cutting a hole for a cable run, and now you need an intact one there, if you route cables like that. Or perhaps you move perforated tiles around and they slowly get nicked.

These decay very slowly, but even the floor tiles do decay.

Walls, especially concrete are almost eternal!

Nope! Over time you’ve needed to attach stuff, make runs through them, remove something, add something again, bump into them etc.!

Eventually, even a concrete wall probably needs plastering and painting. Not quite as often as, say, in a warehouse, but eventually, yeah!

No one touches the ceiling!

Well, if you are on the top story, the roof needs replacement every now and then and can leak. But let’s say you are not; let’s even say you have a concrete ceiling, like we do in our Helsinki datacenter.

You need to attach things to the ceiling, say cable trays, HVAC ducting etc. We’ve had quite a few anchors fail, requiring us to drill in new ones to keep the cable trays from falling.

So, like walls, ceilings decay too. Neither is very likely to need replacement, at least not anytime soon, but they may.

We just checked out a one-story industrial building that would’ve needed a full wall replacement along with a new roof. At that point it’s probably better to just demolish the whole building and build a new one.

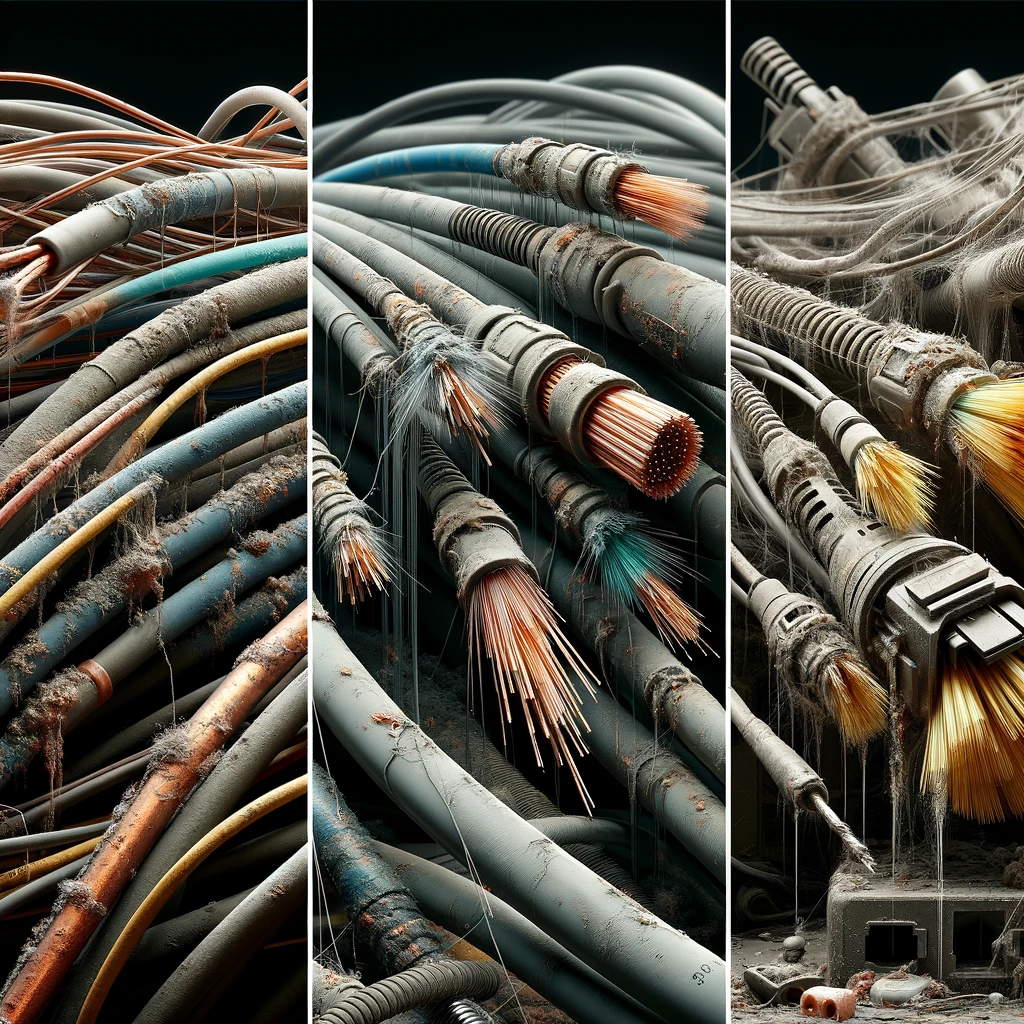

Copper wires are so simple they can’t go bad!

Have you looked at the wiring in a really old house, factory, warehouse, or any really old building? Yes, they do go bad!

Even with modern PVC-coated wire, that sheath eventually fails. We’ve just noticed a sharply increased failure rate in network cables, probably due to the wire insulation inside failing. The cables are only 11 to 12 years old. It’s not many failures, but considering you normally have zero network cables failing, it’s very significant! At this rate, I would presume 100% of our network cables need to be replaced within the next two decades. Since it’s cheaper to do that pre-emptively, we will soon start scrapping old network cables whenever servers get replaced or maintained, or other changes are made.

From experience, we now know why the “big boys” just cut out all network cabling and fiber when refurbishing DCs: nothing old is kept, no matter how trivial or small. It’s cheaper to just replace it all than to hunt down a failed network cable here and there.

Why have your own datacenter then?

Costs, control, capabilities, scaling etc. There are a lot of reasons, which we shall delve into in another post! So stay tuned, and discuss your thoughts below.